I am an AI Engineer @ Item and Unisco.

I received my MS ECE degree from the Georgia Institute of Technology, where my research interests centered on Multimodal Large Language Models (MLLMs) and Affective Computing. Previously, I gained industry experience as an algorithm intern at Tencent and Baidu.

My current work focuses on:

- Graph-based RAG Systems

- Multi-agent Collaboration

- Large Language Models (LLMs) & Multimodal LLMs

You can find me at lum.lin@item.com or lin.yuxiang.contact@gmail.com.

🔥 News

- 2025.09: Started my career as an AI Engineer @ Item and Unisco after graduation.

- 2025.07: Try MER-Factory to automatically construct multimodal emotion recognition and reasoning datasets.

- 2025.06: One paper on benchmarking VLLMs' emotion interpretation ability is accepted by the CVPR Workshop NeXD (1/3 Oral).

- 2025.05: Started my internship at Tencent.

- 2024.12: One paper on Multimodal Large Language Models for emotion reasoning is accepted by NeurIPS (CCF rank A).

- 2024.07: One co-first author paper about invisible gas detection is accepted by CVIU (JCR Q1, CCF rank B). 🎉

- 2024.03: One paper on Conversational Emotion-Cause Pair Analysis with LLMs is accepted by SemEval 2024 @ NAACL.

- 2024.01: Started my internship at Baidu.

- 2024.01: I was awarded the First Prize of Research and Innovation Award (3000 CNY) and Star of Craftsmanship (3000 CNY).

- 2023.08: My instance segmentation tutorial has been featured in the MMYOLO v0.6.0 highlights! Check out the tutorial to master the essentials of instance segmentation.

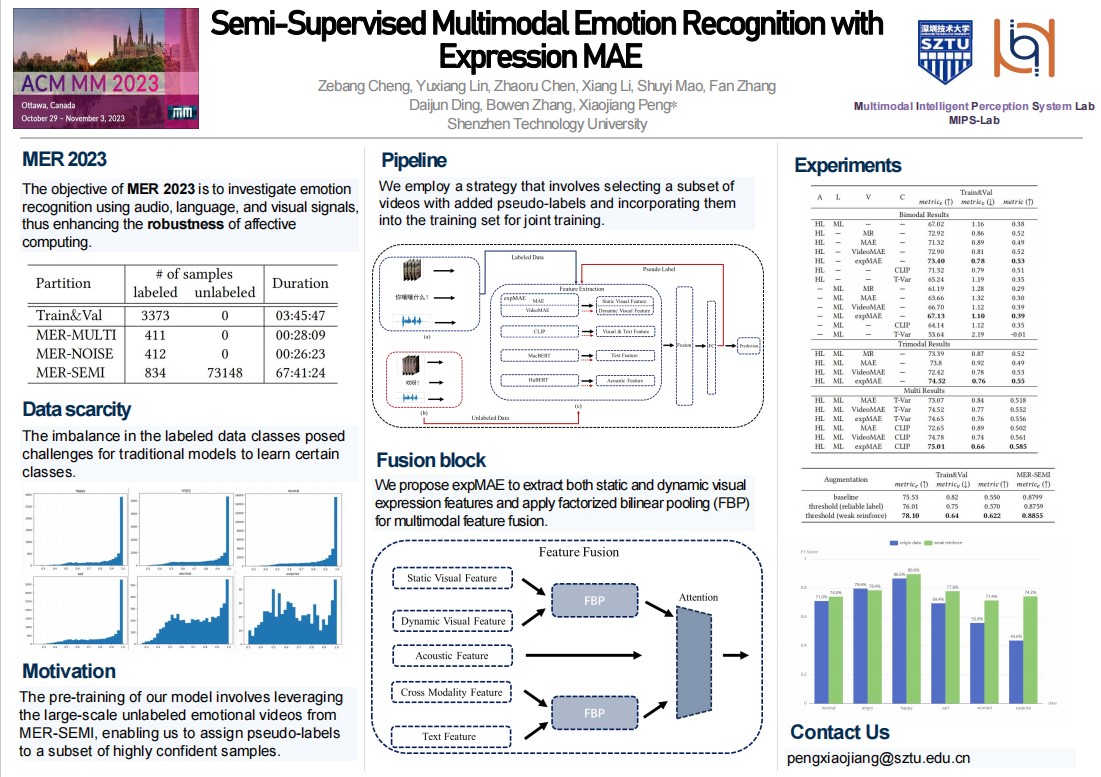

- 2023.07: One paper on multimodal emotion recognition is accepted by ACM MM! 🎉

- 2023.07: We are the runner up in the Grand Challenge (MER 2023) of ACM MM! 🥈

👨‍💻 LLM-Agent Projects

MER-Factory

To the best of my knowledge, the first framework for automatically constructing Multimodal Emotion Recognition and Reasoning (MERR) datasets.

- Action Unit (AU) Pipeline: Extracts facial Action Units (AUs) and translates them into descriptive natural language.

- Audio Analysis Pipeline: Extracts audio, transcribes speech, and performs detailed tonal analysis.

- Video Analysis Pipeline: Generates comprehensive descriptions of video content and context.

- Image Analysis Pipeline: Provides end-to-end emotion recognition for static images, complete with visual descriptions and emotional synthesis.

- Full MER Pipeline: An end-to-end multimodal pipeline that identifies peak emotional moments, analyzes all modalities (visual, audio, facial), and synthesizes a holistic emotional reasoning summary.

Agent-brainstorm

This system implements a 5-stage brainstorming methodology in which multiple AI agents generate, evaluate, and refine ideas for both project development and research papers. The entire process runs locally, with real-time web search integration and arXiv research capabilities.

📝 Publications

📌 Pinned

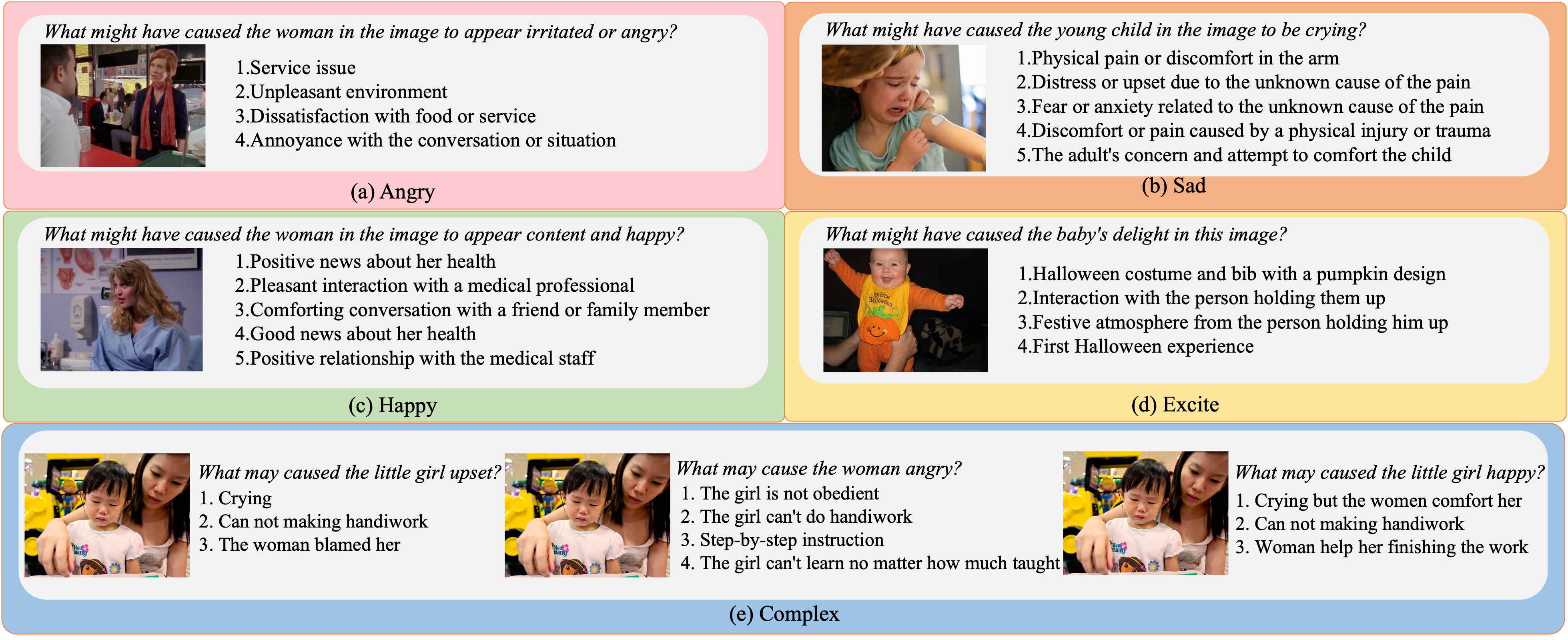

Why We Feel: Breaking Boundaries in Emotional Reasoning with Multimodal Large Language Models

Yuxiang Lin, Jingdong Sun, Zhi-Qi Cheng, Jue Wang, Haomin Liang, Zebang Cheng, Yifei Dong, Jun-Yan He, Xiaojiang Peng, Xian-Sheng Hua

Emotion-LLaMA: Multimodal Emotion Recognition and Reasoning with Instruction Tuning

Zebang Cheng, Zhi-Qi Cheng, Jun-Yan He, Kai Wang, Yuxiang Lin, Zheng Lian, Xiaojiang Peng, Alexander Hauptmann ($2^{nd}$ student author)

NeurIPS (CCF-A) | [Paper] [Code]

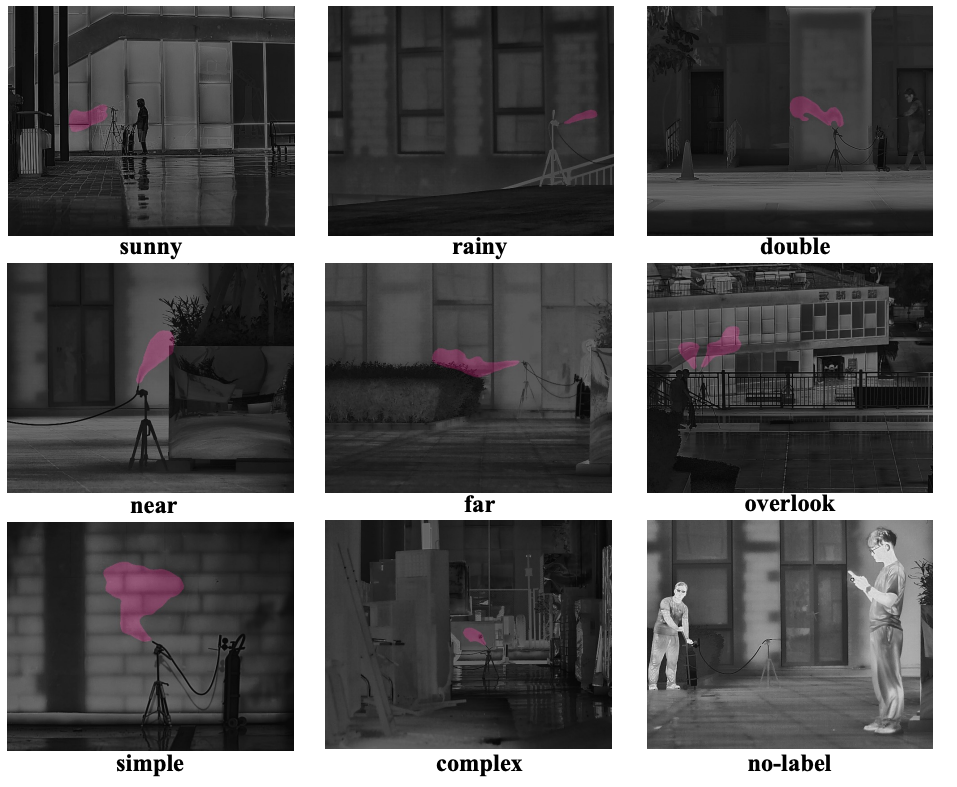

Invisible Gas Detection: An RGB-Thermal Cross Attention Network and A New Benchmark

Jue Wang*, Yuxiang Lin*, Qi Zhao, Dong Luo, Shuaibao Chen, Wei Chen, Xiaojiang Peng (* denotes equal contribution)

Semi-Supervised Multimodal Emotion Recognition with Expression MAE

Zebang Cheng, Yuxiang Lin, Zhaoru Chen, Xiang Li, Shuyi Mao, Fan Zhang, Daijun Ding, Bowen Zhang, Xiaojiang Peng

👨‍💻 Experience

- 2025.05 - present Summer Intern, Tencent

- 2022.10 - 2024.06 Student Research Fellow, MIPS-Lab

- 2024.01 - 2024.04 Research Intern, Baidu Inc

- 2024.01 - 2024.01 Teaching Assistant, InternLM

- 2023.10 - 2024.01 Research Intern, University of Central Florida (UCF)

- 2023.06 - 2023.07 Teaching Assistant, OpenMMLab

- 2023.02 - 2023.07 Visiting Student, Shenzhen Institute of Advanced Technology, CAS

🏅 Selected Awards

- 2020 Second Prize of SZTU Freshman Scholarship (6000 CNY)

- 2022 China Undergraduate Mathematical Contest in Modeling, National Second Prize (top 2%)

- 2023 Dahua Outstanding Scholarship (4000 CNY)

- 2023 OpenMMLab MMSTAR I

- 2024 First Prize of Research and Innovation Award (3000 CNY)

- 2024 Star of Craftsmanship (3000 CNY)